Happy Tuesday, everyone! I am continuing to wrap things up in LA before my move back to Texas. It's crazy to think that my time here is coming to an end, but I'm still trying to get in a few last AI-related events before heading out.

The big story this week is the launch of Elon Musk's Grok 4. This new version has been hyped for some time but has been kept largely under wraps. The previous version of Grok received significant criticism on X, with many users claiming it had gone "too woke." Now, it seems to have swung in the opposite direction, creating a strange push-and-pull dynamic for the model's identity.

It's also in a strange long-term position since it doesn't have a clear primary use case. I mainly use it for quick questions on X, but it lacks the research depth of Google Gemini or ChatGPT. Its image generation has also been problematic since it dropped Flux and tried to roll its own image generation platform.

Grok 4 launches on Wednesday night with a live stream on X. I’ll be sure to share my thoughts on it in next week’s newsletter.

Grok | AI Psychosis

TL;DR: People are increasingly using AI as a therapist, but is it making them lose grip on reality?

The promise of an AI therapist sounds appealing: one that's always available to listen and understand your unique needs, potentially better than the human therapists you've had before. For less serious issues, having an AI career coach to bounce ideas off seems useful.

However, there's an emerging trend of people losing their grip on reality, believing their LLMs have become sentient and that they need to rely on them completely. This comes at a time when companies are encouraging employees to treat AI as the solution to any problem, partly to reduce mental health care costs.

We know from Character AI's challenges earlier this year that making AI more than a tool without proper guardrails presents problems. We may eventually see congressional investigations into this issue.

One prediction suggests the future will have two camps: one group that views AI as definitely not sentient, and another that's firmly convinced it is.

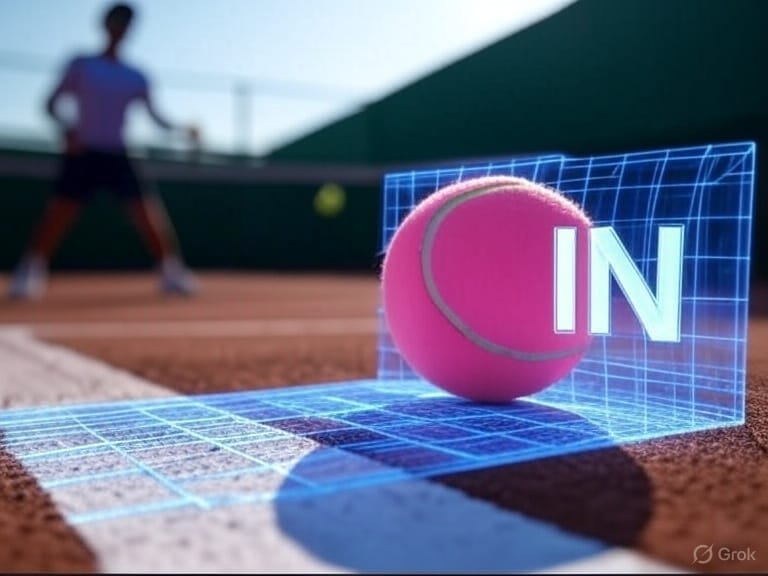

Grok | AI Tennis

TL;DR: Tennis players are now mad at AI.

Tennis players are now frustrated with the AI technology used to determine whether balls are in or out. They used to blame human line judges; now they're taking it out on the machines.

While the AI technology is still a work in progress, it will likely prove more viable in the long term. Players seem to get upset even when the calls are right, and they'll always be upset regardless of whether a ball is in or out. It just shows that referees, whether human or AI, never win.

Grok | Google Harvesting Info

TL;DR: Publishers are taking their fight over Google's AI Overviews, which are reducing their page views, to EU regulators.

If you regularly use Google Search, you've probably noticed that many results now include AI summaries, so users no longer need to click through to the source. This cuts page views and the ad revenue that independent publishers depend on.

Publishers claim Google is scraping their data and presenting it to users, saving them clicks. They're alleging copyright violations and using the EU's more favorable regulatory framework to go after Google over this practice.

Grok | Deepfake Meeting

TL;DR: Someone used AI to impersonate Secretary of State Marco Rubio and arrange meetings with foreign ministers.

This is another emerging trust issue in the age of AI. The scam used audio deepfakes to impersonate Rubio. While this technology has mostly been used in fake kidnapping scams, this marks one of the first high-profile cases of it being used to impersonate a government official and arrange fake meetings with foreign dignitaries.

This incident raises the familiar question: will we be able to trust anything we see or hear in the future? Addressing this will likely require new forms of regulation. For example, governments may need to establish secure, official channels to verify meeting requests and prevent these kinds of scams.

Reels

Didn't like the ending of Squid Game? You're not alone. AI video makers are now stepping in to create alternative endings for the hit show.

Thrills

In news that should surprise no one, The Velvet Sundown, a band we discussed previously, has confirmed that it is in fact an AI band.

Bills

Microsoft and OpenAI announced a new AI program for teachers with the American Federation of Teachers, which said it would use $23 million to open a national training center.